Hidden SEO Website Design Mistakes That Kill Your 2025 Rankings

A shocking 96.55% of web pages get absolutely zero traffic from Google. Think about that for a moment. Businesses pour thousands of dollars into stunning website designs, yet hidden SEO errors quietly destroy their chances of ranking well. Google now evaluates over 200 factors when ranking a website, making SEO website design incredibly complex.

The mobile internet landscape has exploded, with 6.47 billion active users in 2023. Building an SEO-optimized website demands meticulous attention to technical details, mobile performance, and user experience. The problem? Most websites contain serious design flaws that damage search rankings without owners noticing.

What mistakes are killing your rankings? This guide uncovers the most harmful SEO website design errors affecting 2025 rankings and provides straightforward solutions to fix them. You'll learn how to identify and correct everything from JavaScript rendering issues to content layout problems that secretly sabotage your search visibility.

Technical SEO Website Design Flaws That Damage Rankings

Technical website design issues silently wreck your search rankings without warning signs. While content problems jump out immediately, technical SEO flaws hide beneath the surface, sabotaging your SEO website design efforts through mechanisms you never see.

Hidden JavaScript Rendering Issues

Modern websites depend on JavaScript for interactive elements, creating major SEO challenges. Google handles JavaScript applications in three separate phases: crawling, rendering, and indexing. This process means JavaScript-rendered content must clear extra hurdles to appear in search results.

Here's the critical issue: Google crawls and renders pages in separate steps. When Googlebot picks up a URL, it first checks whether crawling is allowed, fetches the page, and only then places it in a separate rendering queue. This two-step system often creates delays between crawling your content and indexing what JavaScript produces.

Single-page applications (SPAs) heavy on JavaScript frequently report incorrect HTTP status codes. Rather than sending proper 404s for missing pages, they often return 200 OK status codes. This confuses search engines, causing error pages to be indexed as normal content and diluting your site's quality signals.
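
To make the status-code point concrete, here is a minimal Python sketch of the server-side decision. The route table and the status_for helper are hypothetical names used only for illustration; a real application would ask its own router or CMS whether a path exists.

```python
from http import HTTPStatus

# Hypothetical route table for illustration only.
KNOWN_ROUTES = {"/", "/pricing", "/blog/seo-basics"}


def status_for(path: str, known_routes: set[str] = KNOWN_ROUTES) -> HTTPStatus:
    """Return the HTTP status the server should send for a requested path."""
    normalized = path.rstrip("/") or "/"
    if normalized in known_routes:
        return HTTPStatus.OK
    # Serving the SPA shell with 200 here would create a "soft 404".
    return HTTPStatus.NOT_FOUND


print(status_for("/pricing/"))      # known page
print(status_for("/no-such-page"))  # missing page gets a real 404
```

The design choice is simply that the server, not the client-side router, decides the status code, so crawlers see the 404 before any JavaScript runs.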

JavaScript-heavy sites using client-side rendering (CSR) face another common problem. The browser builds the HTML by running JavaScript, so the content only exists once rendering completes. If Google skips or fails to render a queued page, crucial elements like internal links, metadata, and even basic content stay invisible to search engines.

Improper Implementation of Structured Data

Structured data provides a standardized format for page information, helping search engines understand content context and classification. When done right, it enables rich results that boost engagement and click-through rates.

Many websites get structured data wrong. A common mistake? Adding markup for content users can't see. Google explicitly warns against creating blank pages just to hold structured data or adding markup about information invisible to users. These practices can trigger manual actions, causing Google to completely ignore your structured data.

Sites often fail to validate their structured data implementation. Google recommends using the Rich Results Test to check what Google rich results your page can generate. The Schema Markup Validator offers an alternative, testing all schema.org markup without Google-specific validation.

Technical issues plague structured data effectiveness too. Sites built with AngularJS must make sure the schema markup actually reaches the rendered page, whether it is placed in the document head, injected through the DOM, or added by a third-party script. JavaScript-based dynamic content creates another problem: inconsistencies between what appears on the page and what's in the structured data markup.
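
One way to catch markup that drifts away from the visible page is to compare the two automatically. The sketch below is a rough check, not a replacement for the Rich Results Test: it assumes JSON-LD markup, static HTML (no JavaScript execution), and a hypothetical markup_mismatches helper that only looks at the "headline" and "name" fields.

```python
import json
from html.parser import HTMLParser


class _PageScanner(HTMLParser):
    """Collect visible text and JSON-LD blocks from static HTML."""

    def __init__(self):
        super().__init__()
        self.text_parts = []
        self.jsonld_blocks = []
        self._current = None     # tag name while inside <script> or <style>
        self._is_jsonld = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._current = tag
            self._is_jsonld = dict(attrs).get("type") == "application/ld+json"
            self._buffer = []

    def handle_data(self, data):
        if self._current is None:
            self.text_parts.append(data)
        elif self._is_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            if self._is_jsonld:
                # Raises ValueError on malformed JSON, which is itself a finding.
                self.jsonld_blocks.append(json.loads("".join(self._buffer)))
            self._current = None
            self._is_jsonld = False


def markup_mismatches(html: str, fields=("headline", "name")) -> list[str]:
    """List structured-data values that never appear in the visible text."""
    scanner = _PageScanner()
    scanner.feed(html)
    visible = " ".join(" ".join(scanner.text_parts).split()).lower()
    missing = []
    for block in scanner.jsonld_blocks:
        if not isinstance(block, dict):
            continue  # arrays and @graph structures are out of scope here
        for field in fields:
            value = block.get(field)
            if isinstance(value, str) and value.lower() not in visible:
                missing.append(f"{field}: {value}")
    return missing
```

An empty list means every checked value also appears on the page; anything returned is worth a manual look.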

Excessive DOM Size and Page Bloat

Excessive DOM size ranks among the most overlooked technical SEO problems. According to Lighthouse, a page's DOM becomes excessive at 1,400 nodes, with warnings starting at 800 nodes. This bloat hurts your site in multiple ways.

Large DOM trees often contain many nodes not visible when users first load the page, unnecessarily increasing data costs and slowing load times. As users interact with your page, the browser must constantly recalculate positions and styling of nodes. A bloated DOM combined with complex style rules drastically slows rendering and interactivity.

Memory performance takes a hit with large DOMs too. When JavaScript uses general query selectors like document.querySelectorAll('li'), you may unknowingly store references to a huge number of nodes, which can overwhelm device memory. Mobile users suffer most, and mobile devices generate most of your traffic.

What causes DOM bloat?

Poorly coded plugins or themes that generate excessive HTML

DOM nodes produced by unnecessary JavaScript

Page builders that create bloated code structures

Text copied from rich text editors like Microsoft Word

How can you fix these issues? Try flattening your HTML structure, using CSS Grid and Flexbox for layouts instead of complex nested structures, and implementing lazy loading for offscreen content. Regular audits of third-party scripts help identify and remove widgets adding unnecessary DOM nodes.
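
For a quick first pass before running Lighthouse, you can count elements in a page's HTML yourself. This is a minimal sketch using Python's standard library; it counts element start tags in the static HTML of the whole document, so it only approximates the rendered DOM that Lighthouse measures. The dom_size_report name is made up for this example, and the thresholds are the 800 and 1,400 figures quoted above.

```python
from html.parser import HTMLParser

WARN_NODES = 800     # warning threshold cited above
ERROR_NODES = 1400   # "excessive" threshold cited above


class _ElementCounter(HTMLParser):
    """Count every element start tag (self-closing tags count once)."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        self.count += 1


def dom_size_report(html: str) -> tuple[int, str]:
    """Return (element count, 'ok' | 'warning' | 'excessive')."""
    counter = _ElementCounter()
    counter.feed(html)
    if counter.count > ERROR_NODES:
        level = "excessive"
    elif counter.count > WARN_NODES:
        level = "warning"
    else:
        level = "ok"
    return counter.count, level
```

Running it against a saved copy of a page before and after removing a plugin or widget gives a rough sense of how many nodes that component adds.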

Fixing these technical SEO website design flaws creates a foundation for better rankings in 2025's complex search landscape. Remember, even the best content can't overcome technical barriers that block search engines from properly accessing, understanding, and ranking your site.

Mobile-First Design Mistakes Hurting Your SEO Optimization

Mobile-first indexing has completely changed the SEO game. With 60% of global internet traffic now coming from mobile devices, focusing on desktop-first design is a recipe for ranking disaster. Google began rolling out mobile-first indexing in 2018 and made it the default for new websites in July 2019, which means it predominantly uses the mobile version of your content for indexing and ranking. Yet countless sites continue making critical mobile design errors that tank their rankings.

Viewport Configuration Errors

Missing the viewport meta tag? This common SEO mistake creates immediate problems. Without this tag, mobile devices render pages at desktop width (typically 800-1024 pixels) and shrink everything down, making your text practically unreadable. Users then face the frustrating choice of scrolling horizontally or pinching and zooming just to navigate your content, sending bounce rates through the roof.

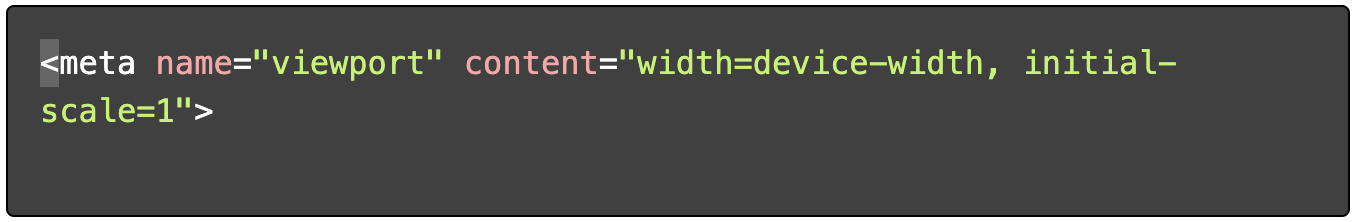

Here's what a properly configured viewport tag should include:

<meta name="viewport" content="width=device-width, initial-scale=1">

The "width=device-width" part tells browsers to match the page width to the device's screen, while "initial-scale=1" sets a 1:1 relationship between CSS pixels and device-independent pixels, so the page can use the full width in landscape orientation too. But don't stop at just adding this tag. Make sure your design actually works with it by avoiding fixed-width elements and using relative width values in your CSS.

When you neglect proper viewport configuration, mobile users struggle with your site. Google notices this struggle and interprets it as poor content value, damaging your rankings in mobile searches.
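
If you manage many templates, a small script can confirm each one carries the viewport settings described above. The following is a minimal sketch using only Python's standard library; viewport_problems is a hypothetical helper name, and it checks only the width and initial-scale values discussed in this section.

```python
from html.parser import HTMLParser


class _ViewportFinder(HTMLParser):
    """Remember the content attribute of <meta name="viewport">."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "viewport":
            self.content = attr.get("content") or ""


def viewport_problems(html: str) -> list[str]:
    """Return a list of viewport issues; an empty list means none found."""
    finder = _ViewportFinder()
    finder.feed(html)
    if finder.content is None:
        return ["missing viewport meta tag"]
    settings = {}
    for part in finder.content.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    problems = []
    if settings.get("width") != "device-width":
        problems.append("width is not device-width")
    if settings.get("initial-scale") not in ("1", "1.0"):
        problems.append("initial-scale is not 1")
    return problems
```

A passing check only proves the tag is present. Fixed-width elements in your CSS can still break the layout, so test on real devices too.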

Touch Element Sizing Problems

How do mobile users interact with your site? Through touch, of course. That's why properly sized buttons and links are essential for SEO-friendly website design. Too-small touch targets create what UX designers call "rage taps": those infuriating moments when users must tap repeatedly just to hit a tiny button.

Did you know element position on screen dictates ideal sizing? Research shows:

Elements at screen top: Minimum 11mm (42px)

Elements at screen bottom: Minimum 12mm (46px)

Elements in screen center: Minimum 7mm (27px)

WCAG 2.1 includes a AAA success criterion requiring targets to measure at least 44×44 CSS pixels, with exceptions such as links in flowing text. This makes perfect sense considering 49% of users handle their device with just one hand, making thumb reach and accuracy major usability factors.
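
The millimetre and pixel figures above appear consistent with converting at the CSS reference of 96 pixels per inch and rounding up. That rounding rule is an assumption on my part, not something the research states. A short Python sketch of the arithmetic, with hypothetical helper names:

```python
import math

CSS_PX_PER_INCH = 96   # CSS defines 1in as 96px
MM_PER_INCH = 25.4


def mm_to_css_px(mm: float) -> int:
    """Convert millimetres to CSS pixels, rounding up (assumed rounding rule)."""
    return math.ceil(mm * CSS_PX_PER_INCH / MM_PER_INCH)


def meets_wcag_target(width_px: float, height_px: float, minimum: int = 44) -> bool:
    """Check a target against the 44x44 CSS pixel minimum mentioned above."""
    return width_px >= minimum and height_px >= minimum


for label, mm in (("top", 11), ("bottom", 12), ("center", 7)):
    print(label, mm_to_css_px(mm))
```

Note that a button sized for the screen center at 27px would still fall short of the 44×44 minimum, so the stricter figure is the safer default.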

Google has flagged "clickable elements too close together" as a mobile usability issue in Search Console reports. These errors happen when interactive elements are either too small or spaced too closely, causing accidental taps that frustrate users and drive up bounce rates. Since user experience feeds into rankings, these seemingly small design issues can seriously harm your website's SEO.

Inconsistent Mobile-Desktop Content

Are you serving different content on mobile and desktop? Big mistake. Content parity between versions is absolutely essential now that Google primarily crawls with smartphone agents. Yet many sites still offer watered-down mobile experiences with less content and fewer internal links than their desktop counterparts.

Your site should deliver a consistent experience regardless of device. Hiding substantial text blocks on mobile while displaying them on desktop directly damages your SEO efforts. Remember this critical point: only content shown on the mobile site gets used for indexing.

For effective SEO implementation, make sure you have:

Equivalent content across both versions (text, images, multimedia)

Same headings and metadata on mobile and desktop

Consistent structured data implementation

Identical internal linking patterns

While you can adapt design elements for mobile (like placing content in accordions or tabs), the underlying content must remain the same. Otherwise, under mobile-first indexing, any content that exists only on the desktop version drops out of Google's index, potentially causing major traffic losses.
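
A parity check like this can be automated by comparing the HTML served to desktop and mobile user agents. The sketch below compares only headings and link targets in static HTML, using Python's standard library; parity_gaps is a hypothetical helper, and fetching the two versions is left out on purpose.

```python
from html.parser import HTMLParser


class _PageOutline(HTMLParser):
    """Collect link targets and heading text from static HTML."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self.links = set()
        self.headings = set()
        self._heading_parts = None  # list while inside a heading, else None

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.add(href)
        elif tag in self.HEADINGS:
            self._heading_parts = []

    def handle_data(self, data):
        if self._heading_parts is not None:
            self._heading_parts.append(data)

    def handle_endtag(self, tag):
        if tag in self.HEADINGS and self._heading_parts is not None:
            self.headings.add(" ".join("".join(self._heading_parts).split()))
            self._heading_parts = None


def parity_gaps(desktop_html: str, mobile_html: str) -> dict[str, set[str]]:
    """Report headings and links present on desktop but absent on mobile."""
    desktop, mobile = _PageOutline(), _PageOutline()
    desktop.feed(desktop_html)
    mobile.feed(mobile_html)
    return {
        "links_missing_on_mobile": desktop.links - mobile.links,
        "headings_missing_on_mobile": desktop.headings - mobile.headings,
    }
```

Content inside an accordion or tab still appears in the HTML, so it will not be reported as missing. Only content that is genuinely absent from the mobile response shows up.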

Fixing these mobile-first design issues creates a foundation for better search rankings while simultaneously improving user experience, two factors that work together in modern SEO website design.

Page Speed Killers in Modern Website Design

Page load speed makes or breaks both user experience and search rankings. Half of all visitors expect pages to load in under two seconds, with bounce rates skyrocketing when sites take longer. Yet countless websites struggle with performance issues that cripple their SEO website design efforts. Let's tackle the most dangerous speed killers that might be hurting your rankings.

Unoptimized Image Delivery

Did you know images make up roughly 50% of all bytes on the average webpage? They've grown almost 5x on desktop and a shocking 7x on mobile pages since 2011. This explosion makes image optimization absolutely critical for your website's SEO performance.

One mistake I see constantly: uploading a single massive image to serve across all devices. While this seems logical—bigger images won't lose quality when displayed smaller—it's terribly inefficient. Your users waste bandwidth as every single pixel must be processed regardless of display size. The fix? Implement responsive images that deliver appropriately sized files based on viewport dimensions.

Poor image optimization directly hits your Core Web Vitals, especially Largest Contentful Paint (LCP) and Cumulative Layout Shift (CLS). When you skip proper width and height attributes, browsers can't allocate space in advance, causing those annoying layout shifts that wreck CLS scores. Meanwhile, that big unoptimized hero image likely becomes your LCP element, and its slow loading seriously damages this crucial metric.

Try these image optimization techniques:

Convert to next-generation formats like WebP, which load faster than traditional JPEG or PNG formats

Implement lazy loading to defer offscreen images until needed

Properly size images to stop serving unnecessarily large files

Use image compression to shrink file size without sacrificing quality
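
Several of these techniques come together in the markup for a single image. As a rough illustration, this Python sketch builds an img tag with a srcset, explicit dimensions, and optional lazy loading. The responsive_img helper, the "name-width.webp" file naming scheme, and the default sizes value of 100vw are all assumptions for the example, not a standard.

```python
from html import escape


def responsive_img(base: str, widths: list[int], alt: str,
                   width: int, height: int, ext: str = "webp",
                   sizes: str = "100vw", lazy: bool = True) -> str:
    """Build an <img> tag that offers one file per width via srcset."""
    # Assumed naming scheme: /img/hero-400.webp, /img/hero-800.webp, ...
    srcset = ", ".join(f"{base}-{w}.{ext} {w}w" for w in sorted(widths))
    largest = max(widths)
    loading = ' loading="lazy"' if lazy else ""
    return (
        f'<img src="{base}-{largest}.{ext}" srcset="{srcset}" sizes="{sizes}" '
        # Explicit width and height let the browser reserve space (helps CLS).
        f'width="{width}" height="{height}"{loading} alt="{escape(alt)}">'
    )


# A hero image is usually the LCP element, so it should not be lazy-loaded.
print(responsive_img("/img/hero", [400, 800, 1200], "Hero", 1200, 600, lazy=False))
```

Offscreen images can keep the default lazy=True, while anything visible on first load is better fetched immediately.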

Render-Blocking Resources

Render-blocking resources force browsers to wait before displaying any content, essentially holding your page hostage until specific files are fully downloaded, parsed, and executed. The usual suspects? CSS stylesheets that have no disabled attribute and no media attribute excluding the current device, and JavaScript files sitting in the document head without defer or async attributes.

Each render-blocking resource adds latency, as browsers must pause rendering while processing these files. JavaScript-heavy sites suffer the most—research confirms JavaScript is typically the main culprit behind slow, unresponsive pages.

To fix render-blocking CSS, implement critical CSS by extracting and inlining styles needed for above-the-fold content directly in the document head. Then load your remaining styles asynchronously. For stylesheets targeting different screen sizes, use media attributes to prevent unnecessary blocking—browsers only block rendering for CSS that matches the current device.

JavaScript optimization relies on the async and defer attributes. Both let the browser download scripts without blocking HTML parsing, though they work differently: async executes each script as soon as it finishes downloading, regardless of HTML parsing status or script order, while defer waits until HTML parsing completes and then runs scripts in document order.
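
To find likely render-blocking resources in a template, you can scan the document head for scripts and stylesheets that lack these attributes. This is a simplified sketch with Python's standard library. The render_blocking_resources helper is hypothetical, and because media queries can't be evaluated statically, it only flags stylesheets whose media attribute is missing, "all", or "screen".

```python
from html.parser import HTMLParser


class _BlockingFinder(HTMLParser):
    """Collect URLs in <head> that probably block rendering."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
            return
        if not self.in_head:
            return
        attr = dict(attrs)
        if tag == "script" and attr.get("src"):
            # Module scripts are deferred by default.
            is_module = (attr.get("type") or "").lower() == "module"
            if "async" not in attr and "defer" not in attr and not is_module:
                self.blocking.append(attr["src"])
        elif tag == "link" and "stylesheet" in (attr.get("rel") or "").lower().split():
            media = (attr.get("media") or "all").strip().lower()
            if "disabled" not in attr and media in ("all", "screen"):
                self.blocking.append(attr.get("href") or "")

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def render_blocking_resources(html: str) -> list[str]:
    """Return URLs of head scripts and stylesheets that likely block rendering."""
    finder = _BlockingFinder()
    finder.feed(html)
    return finder.blocking
```

Treat the result as a list of candidates to review, since a tool like Lighthouse measures what actually blocked rendering in a real page load.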

Server Response Time Issues

Server response time measures how long it takes your server to deliver the necessary HTML to begin rendering. Google's PageSpeed guidance has long recommended keeping this under 200ms, and Lighthouse flags responses slower than 600ms. Miss these targets, and you create a critical bottleneck that undermines all your other SEO efforts.

Many factors contribute to slow server response: inefficient application logic, poorly optimized database queries, inadequate routing, resource starvation, or simply choosing the wrong hosting provider. Even with flawless frontend optimization, a slow server foundation guarantees poor performance.

To identify the real problem, measure your Time to First Byte (TTFB)—the time between a browser's initial request and receiving the first byte of response. High TTFB values typically point to server-side issues needing immediate attention.
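
You can get a rough TTFB reading from a script as well as from browser tools. The sketch below uses Python's standard http.client. Note the assumption: it opens the connection before starting the clock, so it isolates server processing and leaves out DNS, TCP, and TLS setup, which browser-based TTFB figures usually include. The measure_ttfb name and its parameters are made up for this example.

```python
import http.client
import time


def measure_ttfb(host: str, port: int = 443, path: str = "/",
                 use_tls: bool = True, timeout: float = 10.0) -> float:
    """Seconds from sending a GET until the response headers arrive."""
    conn_cls = http.client.HTTPSConnection if use_tls else http.client.HTTPConnection
    conn = conn_cls(host, port, timeout=timeout)
    try:
        conn.connect()                 # connection setup is excluded from timing
        start = time.perf_counter()
        conn.request("GET", path)
        response = conn.getresponse()  # returns once status line and headers are read
        elapsed = time.perf_counter() - start
        response.read()                # drain the body before closing
        return elapsed
    finally:
        conn.close()
```

A single reading is noisy, so take several measurements at different times of day and compare the median against your target.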

The solutions? Implement caching, optimize database connections, reduce redirects, select appropriate hosting, and keep server software updated. For high-traffic websites, consider server infrastructure upgrades or implement a Content Delivery Network (CDN) to distribute load and deliver content from servers geographically closer to your users.

Fix these critical page speed killers, and your SEO-friendly website design will deliver dramatically better user experiences while winning favor from search algorithms that increasingly focus on performance metrics when deciding rankings.

Information Architecture Errors That Confuse Search Engines

Every successful website stands on a well-planned information architecture that guides users and search engines through your content. Sadly, many SEO website design efforts collapse due to structural flaws that leave search algorithms scratching their digital heads, tanking your rankings regardless of content quality.

Poor URL Structure Implementation

URLs act as roadmaps for users and search engines, yet websites constantly implement them poorly. Google explicitly recommends using readable words instead of meaningless ID numbers in URLs to make them understandable to humans. Your URLs should be clear yet concise, instantly signaling what content awaits on the page.

Look at these common URL structure mistakes:

Using underscores instead of hyphens to separate words (Google specifically wants hyphens)

Building overly complex URLs with multiple parameters

Setting up dynamic URLs that generate infinite spaces

Mixing uppercase and lowercase letters inconsistently, creating duplicate content issues

Sticking dates in URLs that unnecessarily age your content

These issues go far beyond looking unprofessional. Those complex URLs with multiple parameters force Googlebot to burn through unnecessary bandwidth or leave parts of your site completely unindexed. Google has confirmed that URL structure directly impacts how well your site gets crawled and indexed.
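
Several of the mistakes listed above can be normalized mechanically. Here is a minimal Python sketch that lowercases a URL, swaps underscores for hyphens, and drops the query string. The clean_url helper is hypothetical, and dropping every parameter is an assumption that only fits URLs whose parameters carry tracking or session state rather than content.

```python
import re
from urllib.parse import urlsplit, urlunsplit


def clean_url(url: str) -> str:
    """Return a lowercase, hyphen-separated URL without query or fragment."""
    parts = urlsplit(url)
    path = parts.path.lower().replace("_", "-")
    path = re.sub(r"-{2,}", "-", path)   # collapse repeated hyphens
    path = re.sub(r"/{2,}", "/", path)   # collapse repeated slashes
    # Assumption: query parameters and fragments are not needed to identify content.
    return urlunsplit((parts.scheme, parts.netloc.lower(), path, "", ""))


print(clean_url("https://Example.com/Blog/SEO_Design_Tips?sessionid=42&sort=asc"))
```

If you change live URLs this way, pair each old address with a 301 redirect to the cleaned one so existing links keep working.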

Ineffective Internal Linking Patterns

Internal links build relationships between content and show Google your website's structure. They create a hierarchy that lets you assign more value to important pages and less to minor ones. This means internal linking patterns fundamentally shape how search engines discover, index, and understand your pages.

Surprisingly, many websites scatter internal links without logic, connecting to irrelevant pages or ones that don't fit contextually. This confuses users and dilutes link value. Too many links on a single page can also prevent search engines from properly crawling all linked content, though large e-commerce sites might handle thousands of links just fine.

Remember this: because links pass value forward, a page with more internal links pointing to it receives more value. This is why linking from high-traffic spots like your homepage delivers significantly more benefit than links from rarely-visited pages. Watch out for accidental noindex tags, nofollow attributes on internal links, or robots.txt restrictions that block Google from properly crawling and assigning authority to your pages.

Orphaned Content Pages

Orphaned content represents the forgotten stepchild of SEO. These pages have zero internal links pointing to them, making them impossible to find through normal site navigation. They exist outside your site structure, isolated from both users and search algorithms.

The SEO damage? Massive. Without internal links, search engines struggle to find and index these pages. Even if Google eventually discovers an orphan page (maybe through your XML sitemap), it typically ranks poorly because it lacks the internal links that pass authority and relevance signals.

How do orphaned pages happen? Through several common scenarios: poor internal linking practices, sloppy site migrations, deleted links, or regular updates without proper housekeeping. These pages waste your precious crawl budget and create frustrating user experiences when visitors somehow land on them but can't navigate back to your main site.

The solution requires regular site audits to identify orphaned pages. Then you have three options: integrate them into your site structure with relevant internal links, implement 301 redirects to appropriate alternatives, or remove them entirely if they no longer serve a purpose.
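
The audit step boils down to a set comparison: every URL you expect to exist, minus every URL that some other page links to. A minimal Python sketch, assuming you already have the sitemap URLs and a crawl of internal links (the find_orphans name and the data shapes are hypothetical):

```python
def find_orphans(sitemap_urls: set[str],
                 internal_links: dict[str, set[str]],
                 home: str = "/") -> set[str]:
    """Return sitemap URLs that no other page links to.

    internal_links maps each crawled page to the set of pages it links to.
    """
    linked_to = set()
    for source, targets in internal_links.items():
        # A page linking to itself does not make it reachable.
        linked_to |= {target for target in targets if target != source}
    # The homepage is the entry point, so it is never treated as an orphan.
    return sitemap_urls - linked_to - {home}


links = {
    "/": {"/blog", "/pricing"},
    "/blog": {"/blog/post-1", "/"},
    "/old-landing": {"/old-landing"},
}
sitemap = {"/", "/blog", "/pricing", "/blog/post-1", "/old-landing"}
print(find_orphans(sitemap, links))
```

In this example only /old-landing is reported, because nothing except the page itself links to it.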

Fix these information architecture errors, and your SEO-friendly website design will provide clear pathways for both users and search engines, boosting your chances of ranking higher in 2025's hyper-competitive search landscape.

Content Layout Mistakes That Reduce SEO Value

Technical SEO and blazing page speed won't save your rankings if you're making crucial content layout mistakes. How your content appears on the page drastically impacts how search engines evaluate and rank your SEO website design.

Hidden Content in Tabs and Accordions

Google claims hidden content in tabs and accordions carries equal weight, but real-world results tell a different story. Iceland Groceries saw their organic sessions jump by 12% simply by revealing previously hidden content. Another six-month study discovered Google's algorithms consistently preferred fully visible text over content hidden through CSS or JavaScript.

Accordions might work perfectly for FAQ sections, but hiding essential elements like product descriptions significantly reduces their SEO value. The pattern is clear: visible content outperforms hidden content in search rankings.

Text-to-Code Ratio Imbalance

Ever heard of the text-to-HTML ratio? This measures the amount of visible text relative to the total HTML code on your page. A commonly cited target is a ratio between 25% and 70%. Pages with higher text-to-code ratios tend to be more user-friendly and load faster, while low ratios typically signal bloated HTML that slows everything down.

Want to improve this ratio? Try these approaches:

Place CSS and JavaScript in separate files

Remove unnecessary HTML elements

Validate your code

Eliminate commented-out code

While text-to-HTML ratio isn't a direct ranking factor, a better ratio creates cleaner code that helps search engines crawl and index your site more efficiently. Think of it as cleaning up a cluttered room—everything just works better when the mess is gone.
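
There is no single official formula for this ratio, so tools differ slightly. One reasonable interpretation, sketched here in Python, divides the length of the visible text by the length of the full HTML source. The text_to_html_ratio helper and the choice to ignore script, style, noscript, and template content are assumptions of this example.

```python
from html.parser import HTMLParser


class _VisibleText(HTMLParser):
    """Collect text that is not inside script, style, noscript, or template."""

    SKIP = {"script", "style", "noscript", "template"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def text_to_html_ratio(html: str) -> float:
    """Visible text length as a percentage of total HTML length."""
    if not html:
        return 0.0
    parser = _VisibleText()
    parser.feed(html)
    parser.close()
    text = " ".join("".join(parser.parts).split())  # collapse whitespace
    return 100 * len(text) / len(html)
```

Tracking the figure over time on the same template is more useful than comparing it against other sites, since each tool counts characters a little differently.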

Keyword Cannibalization Through Design

Keyword cannibalization is that awkward moment when multiple pages on your site compete for the same keywords with identical search intent. This common SEO mistake leaves search engines confused about which page deserves priority.

Design choices frequently create this problem without you even realizing it through:

Multiple similar pages targeting identical keywords

Content fragmentation across several pages

Duplicative section headings and URL structures

The result? Fragmented page authority and wildly erratic search rankings. You're essentially competing against yourself, splitting your ranking power instead of consolidating it.

The fix isn't complicated: consolidate overlapping content, use canonical tags appropriately, or revamp pages to target different search intents. Sometimes less truly is more when it comes to content organization and SEO effectiveness.
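
Spotting candidates for consolidation is easy to script once you have a keyword map. This minimal Python sketch assumes you maintain a mapping from each URL to its target keyword and search intent, and that the find_cannibalization helper name is hypothetical. It groups pages that share both.

```python
from collections import defaultdict


def find_cannibalization(
    pages: dict[str, tuple[str, str]]
) -> dict[tuple[str, str], list[str]]:
    """Group URLs that target the same keyword with the same intent.

    pages maps URL -> (target keyword, search intent).
    """
    groups = defaultdict(list)
    for url, (keyword, intent) in pages.items():
        key = (keyword.strip().lower(), intent.strip().lower())
        groups[key].append(url)
    # Only groups with more than one URL are competing with each other.
    return {key: sorted(urls) for key, urls in groups.items() if len(urls) > 1}


pages = {
    "/seo-audit": ("SEO audit", "informational"),
    "/blog/seo-audit-guide": ("seo audit ", "Informational"),
    "/seo-audit-service": ("seo audit", "transactional"),
}
print(find_cannibalization(pages))
```

The service page is left alone because its intent differs, which matches the point above that pages can share a keyword as long as they serve different search intents.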

Security Design Flaws That Impact SEO Rankings

Security vulnerabilities lurk in many website designs, silently sabotaging your search rankings. Most site owners focus entirely on content and technical SEO while completely overlooking security factors. As search engines increasingly prioritize user safety, security flaws directly undercut your SEO website design effectiveness regardless of other optimization work.

Mixed Content Issues

Mixed content happens when your secure HTTPS page loads resources like images, videos, stylesheets, or scripts over insecure HTTP connections. This creates major security holes that search engines actively penalize. Starting in December 2019, Google Chrome began gradually blocking mixed content by default, directly affecting both user experience and site accessibility. When visitors see those alarming security warnings due to mixed content, they leave fast. These skyrocketing bounce rates send powerful negative signals to search algorithms.

The SEO damage runs deep. Just one instance of mixed content can wreck your site's credibility and search engine standing. Those mixed content issues also dilute all the advantages of having an HTTPS site, potentially erasing ranking benefits you'd otherwise enjoy. Even worse, attackers can exploit these insecure requests to replace your images with malicious content or insert clickable ads leading to phishing scams.
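
Finding mixed content in a template is a matter of looking for subresources requested over http://. The Python sketch below scans static HTML with the standard library. The find_mixed_content helper is hypothetical, the tag list is deliberately short, and it won't see resources added later by JavaScript or referenced inside CSS files.

```python
from html.parser import HTMLParser


class _InsecureResourceFinder(HTMLParser):
    """Collect subresources that are requested over plain HTTP."""

    SRC_TAGS = {"img", "script", "iframe", "audio", "video", "source"}

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag in self.SRC_TAGS:
            url = attr.get("src") or ""
        elif tag == "link" and "stylesheet" in (attr.get("rel") or "").lower().split():
            url = attr.get("href") or ""
        else:
            return  # ordinary links (<a href>) are navigation, not mixed content
        if url.lower().startswith("http://"):
            self.insecure.append((tag, url))


def find_mixed_content(html: str) -> list[tuple[str, str]]:
    """Return (tag, url) pairs for insecure subresources in an HTTPS page."""
    finder = _InsecureResourceFinder()
    finder.feed(html)
    return finder.insecure
```

Most findings can be fixed by changing the URL to https://, provided the host actually serves the resource securely.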

Insecure Form Implementations

Those contact, subscription, and checkout forms? If they're collecting visitor information without proper security measures, you're creating serious ranking vulnerabilities. Unencrypted forms send data as plain text, making personal information easy to intercept. Forms served and submitted over HTTPS (using TLS, still often called SSL) not only protect user data but also satisfy Google's requirements for safe websites, bringing benefits for both user experience and SEO.

Security doesn't stop at encryption. Implementing effective spam detection methods like CAPTCHA maintains submission quality. Without these protections, spam can flood your forms, damaging both site reputation and search rankings. Form security also dramatically affects conversion rates—today's users simply won't submit information on sites displaying security warnings.

Missing HTTPS Implementation

HTTPS isn't optional anymore for effective website SEO. Google officially confirmed HTTPS as a ranking signal back in 2014, explicitly favoring secure websites in search results. This means non-HTTPS sites face ranking disadvantages regardless of content quality. Chrome now displays attention-grabbing "Not Secure" warnings for all HTTP sites, creating immediate trust issues that send users running.

Beyond rankings, HTTPS improves your analytics by preserving referral data between secure sites. Without it, traffic arriving from HTTPS sites misleadingly appears as "direct," hiding valuable optimization insights. Google Search Console also provides an HTTPS report showing which of your indexed pages are served securely, which makes security part of any comprehensive SEO strategy.

The message is clear: secure your site or watch your rankings disappear. Security isn't just about protection—it's become a fundamental ranking factor you ignore at your peril.

Final Thoughts on SEO Website Design Success

Website design mistakes silently destroy search rankings for businesses every day. You might have the most amazing content in your industry, but technical issues like JavaScript rendering problems, mobile optimization failures, and sluggish page speed create massive barriers between that content and the search positions you deserve.

Search engines no longer evaluate websites with simple metrics. Google's algorithm has evolved into a sophisticated evaluation system that examines hundreds of factors simultaneously. This means successful SEO demands careful attention to information architecture, content presentation, and security implementations. When was the last time you audited your site for these hidden ranking killers?

Looking ahead to 2025's hyper-competitive search landscape, a piecemeal approach simply won't cut it. The websites that dominate search results will excel across multiple dimensions:

Mobile-first design principles that deliver seamless experiences

Lightning-fast page speeds that keep bounce rates low

Clean, efficient code structures that search engines love to crawl

Rock-solid security measures that protect users and earn trust

These elements don't work in isolation—they function as an interconnected system. A beautifully designed site with security vulnerabilities will fail. A secure site with terrible page speed will struggle. A fast site with poor mobile experience won't compete.

I've seen countless businesses invest thousands in content creation while completely ignoring these fundamental design issues. The result? Content that never reaches its audience. Proper SEO website design marries technical excellence with strategic content presentation, creating a foundation that supports everything else you do.

Start by tackling the issues outlined in this guide. Each fix brings you closer to better visibility and superior user experiences. Then commit to regular testing and optimization as search algorithms continue to evolve. Remember, SEO isn't a one-time project—it's an ongoing process of refinement that rewards the vigilant and punishes the complacent.

What design issues will you fix first?